We are conditioned to think AI must be instant. We type a request, hit enter, and expect the text to stream out immediately. If the cursor hangs for ten seconds, we assume the model has crashed.

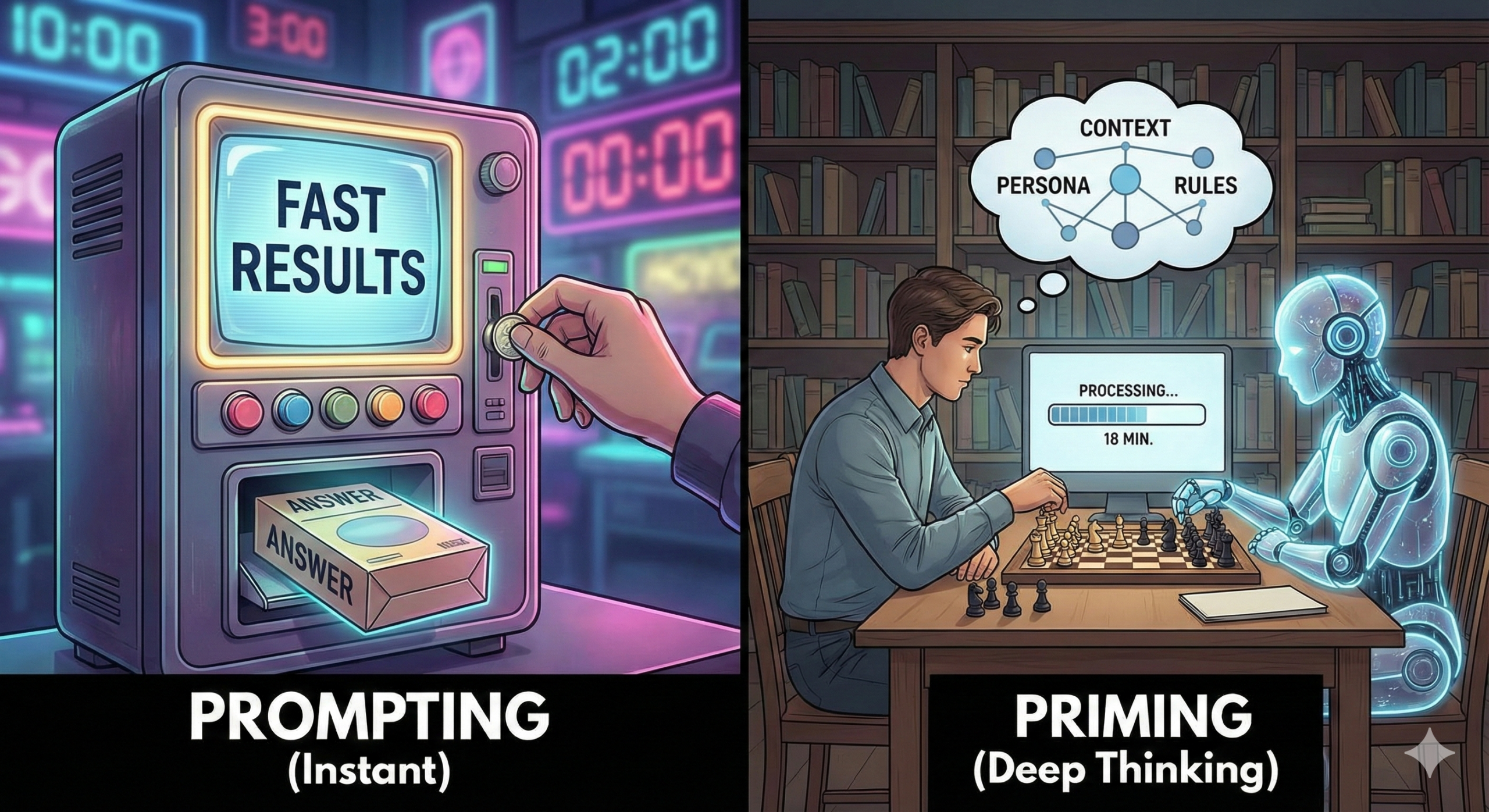

This is the Culture of Instant Gratification. We have spent three years treating AI like a Vending Machine. Insert coin, get snack. With a well-written prompt, this can work well for simple queries. But complex problem solving benefits from a different approach.

A story from recent OpenAI updates shows why. It involves a graduate-level physics problem about black hole symmetries.

- The Instant Attempt: When the model was forced to answer quickly, it failed. It gave a confident but wrong answer: “No symmetries found.”

- The “Thinking” Attempt: Then, they tried again using the new “Thinking” protocols. It didn’t answer in ten seconds. It didn’t answer in two minutes. It “thought” for 18 minutes.

The result? It produced a novel, correct mathematical proof that the human expert admitted was at the very edge of their own capabilities.

This marks a massive shift for us as users. We are moving from an era of Search to an era of Reasoning. To survive this shift, we need to stop just “prompting” and start “priming.”

“Wait, isn’t Priming just a long prompt?”

No. Confusing the two is why most people get average results.

A “Thorough Prompt” is a data dump. You write a massive paragraph that includes your persona, your background info, your tone guidelines, and your question, all at once. You hit enter and cross your fingers.

- The risk: The AI gets “cognitive overload.” It tries to process the context and generate the answer simultaneously. Often, it latches onto the last thing you said (recency bias) and ignores the nuance in the middle.

“Priming” is a workflow. Priming creates a pause between the context and the work. It separates “understanding” from “execution.”

- How it looks: You feed the AI the context, including the persona, the data, and the rules. Crucially, you don’t ask it to do the work yet. You simply ask it to confirm it understands.

- The result: The context, along with the model’s own confirmation of it, is already in the conversation before the model starts generating tokens for the answer.
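If you talk to a model through code rather than a chat window, the same two-step workflow can be sketched roughly like this. This is a minimal illustration, not any vendor's API: `prime_then_ask`, the `ChatFn` signature, and the `fake_chat` stand-in are all hypothetical names invented for this example, and the role/content message shape is an assumption based on the format most chat interfaces use.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
# Hypothetical signature: takes the full message history, returns the reply text.
ChatFn = Callable[[List[Message]], str]


def prime_then_ask(chat: ChatFn, context: str, question: str) -> List[Message]:
    """Run a two-turn priming exchange and return the full transcript."""
    history: List[Message] = [
        {
            "role": "user",
            "content": context
            + "\n\nDo not start the task yet. Briefly confirm that you understand.",
        }
    ]
    # Turn 1: the model only acknowledges the context (the "nod").
    history.append({"role": "assistant", "content": chat(history)})

    # Turn 2: the actual request, sent only after the acknowledgement.
    history.append({"role": "user", "content": question})
    history.append({"role": "assistant", "content": chat(history)})
    return history


def fake_chat(messages: List[Message]) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    return f"(reply to turn {len(messages) // 2 + 1})"


transcript = prime_then_ask(
    fake_chat,
    context="Persona: senior editor. Rules: plain language, no jargon.",
    question="Rewrite this paragraph for a general audience.",
)
for message in transcript:
    print(f"{message['role']}: {message['content']}")
```

To try it against a real model, you would swap `fake_chat` for a function that sends the message list to your provider of choice and returns the reply text. The point of the structure is that the question is a separate turn, so the context and the model's confirmation are both in the history by the time the work is requested.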

The “Thinking” Strategy

The reason the OpenAI model succeeded on the physics problem after 18 minutes is that it effectively primed itself. It spent that time exploring the problem space and setting its own parameters before committing to a final answer.

In 2026, you have two choices:

- Keep Prompting: Dump everything in one box, hit enter, and risk a fast, hallucinated answer.

- Start Priming: Establish the context, wait for the “nod” from the AI, and then ask the question.

The best answers to very complex questions won’t be instant. They will be the ones where both you and the AI took the time to prepare.